What is Implementation Science?

What exactly is implementation science, how does it fit in with other research, and why is it important?

Defining Implementation Science

Put simply, implementation science is the field of study that focuses on how to apply evidence in practice. When we know something works, the question is: how do we make sure it reaches the people who would benefit from it?

There are a variety of more specific definitions, each building on this one in its own way. You’ll also sometimes see names like “dissemination and implementation” or “knowledge translation”. These are related fields that often overlap with implementation science (and the terms are sometimes used interchangeably).

Definitions of Implementation Science

“Implementation science (IS) is the study of methods to promote the adoption and integration of evidence-based practices, interventions, and policies into routine health care and public health settings to improve our impact on population health”

“Implementation research (IR) is the systematic approach to understanding and addressing barriers to effective and quality implementation of health interventions, strategies and policies”

“Implementation science is the study of methods to increase the uptake of evidence-based interventions in real world settings to improve individual and population health.”

“Implementation science is the scientific study of methods and strategies that facilitate the uptake of evidence-based practice and research into regular use by practitioners and policymakers”

“...the scientific study of methods to promote the uptake of research findings into routine healthcare in clinical, organizational, or policy contexts.”

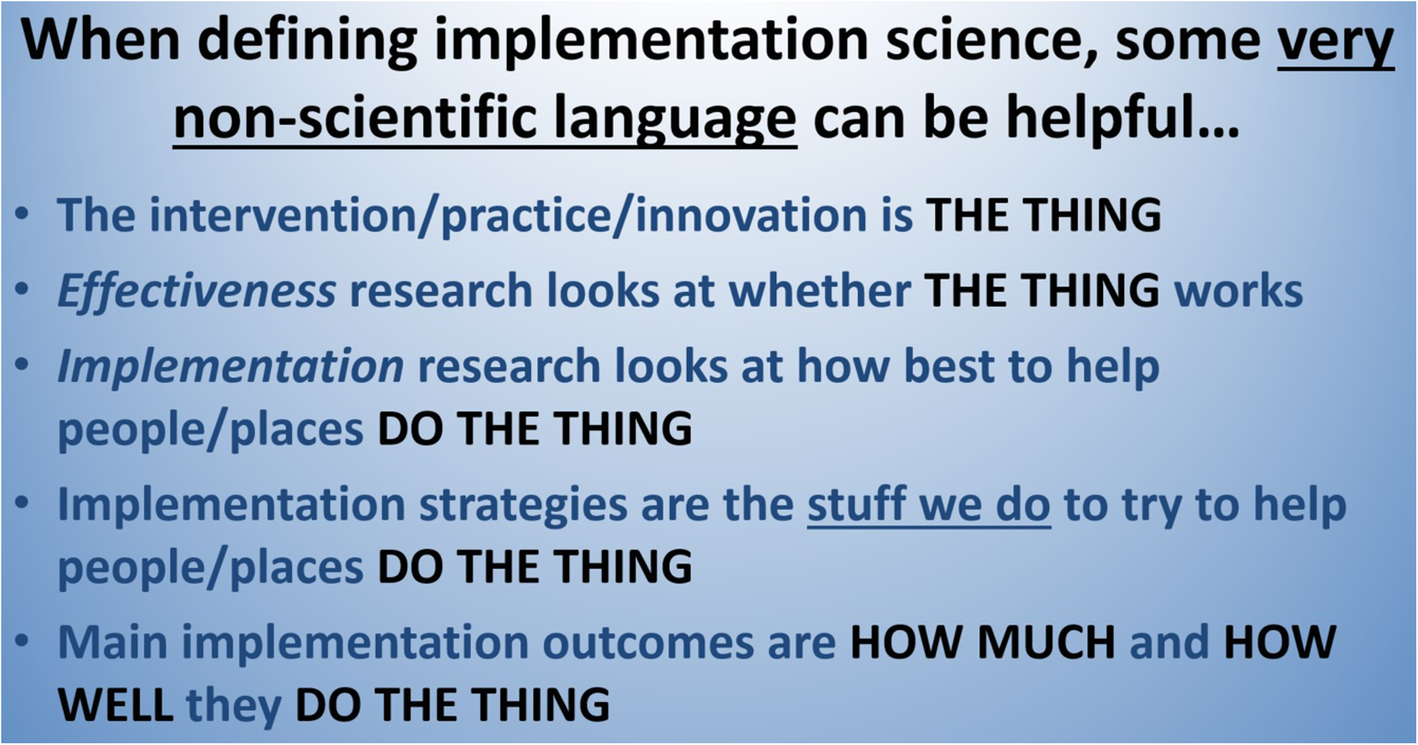

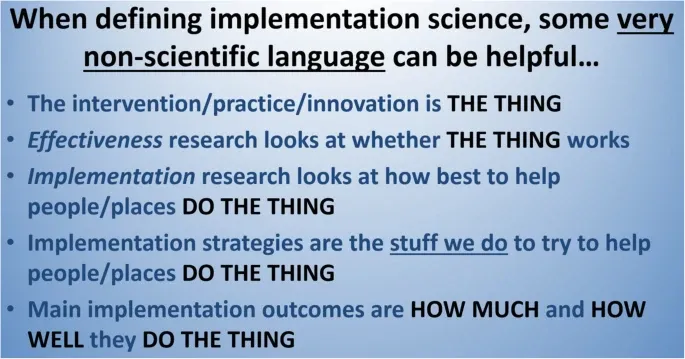

All this terminology can get a little confusing. Luckily, implementation scientists being implementation scientists, there are many ways of explaining the field. One, created by Dr. Curran, explains implementation science in simple terms:

Essentially, implementation science is the systematic study of methods to increase the uptake of evidence-based practices in real-world settings. Although it most often focuses on health practices, it can also address educational or social practices, and it draws on methods and research from a variety of disciplines, such as public health, sociology, economics, psychology, and communications.

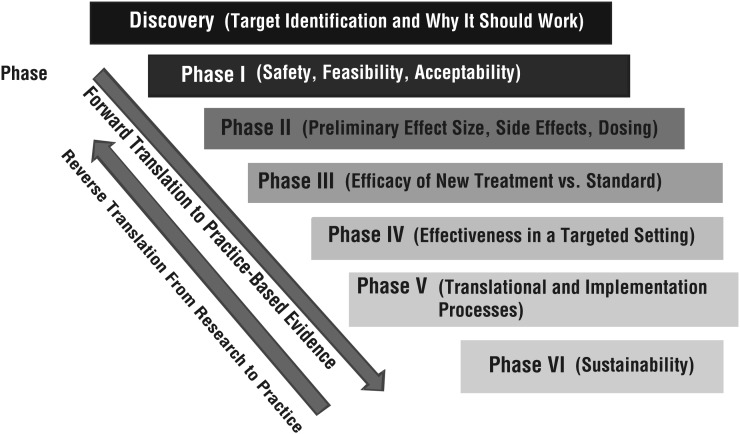

How does implementation science fit into the research pipeline?

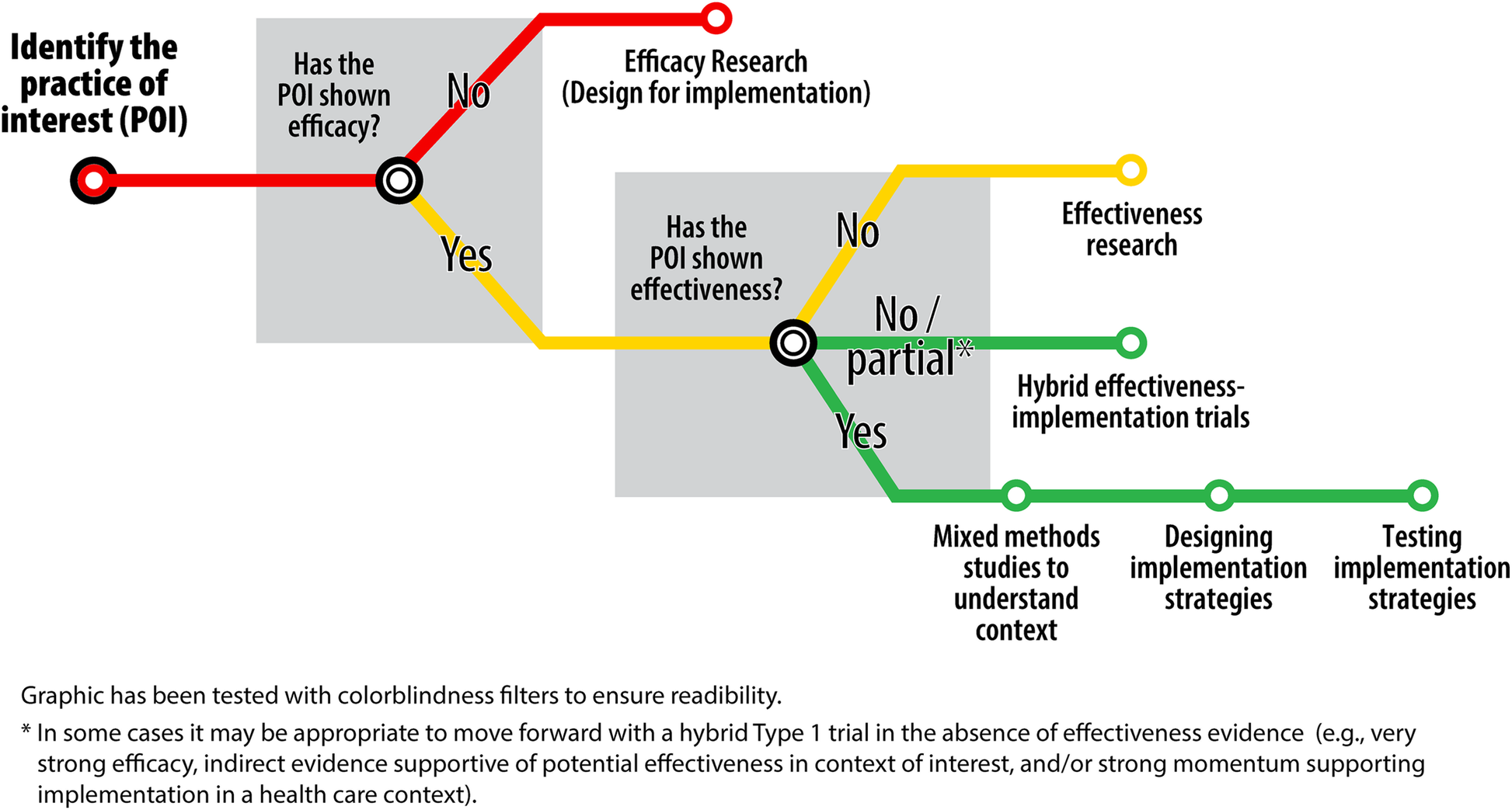

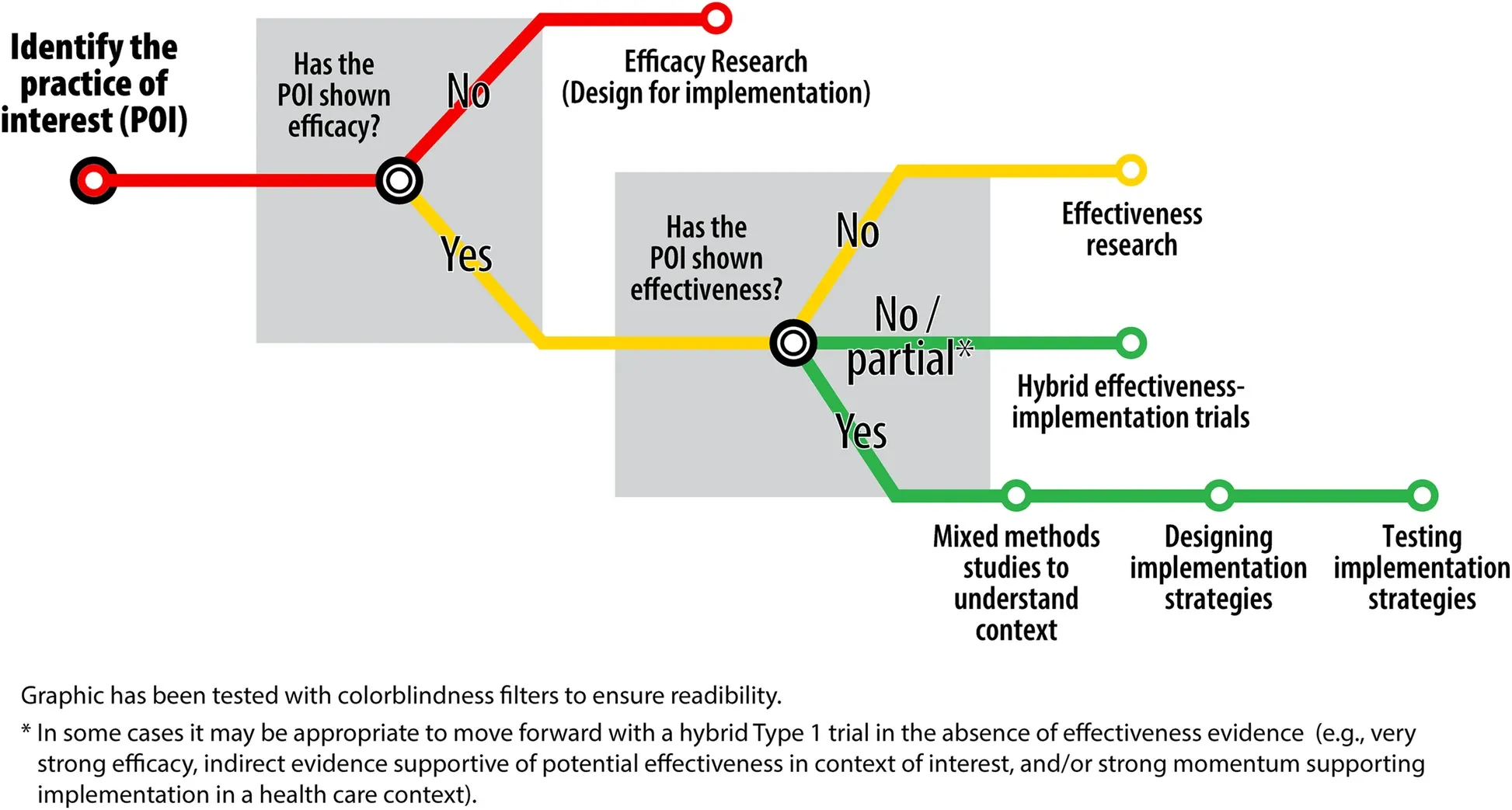

To situate the field within the research process in more detail, it can help to think about it in terms of this “subway map” of research created by Lane-Fall, Curran, and Beidas:

The “practice of interest” is the “thing” from Dr. Curran’s explanation above, that is, whatever intervention you want to be implemented. These practices can be anything from updated guidelines to outreach programs to new medications, and they typically aim to improve service delivery or outcomes. However, you shouldn’t be implementing anything unless you know that it works, which is where the ideas of efficacy and effectiveness come in.

When you have a practice of interest, you first have to determine whether it has shown “efficacy”, which basically means whether it works in ideal settings. If you’ve ever seen a flyer asking for research participants for a lab study, it was often for efficacy research. This kind of research typically takes place in controlled environments, is carried out by experts, and is tested only on people who meet certain criteria (the right age, no other health problems, etc.). These trials usually don’t focus much on implementation, since the practice may turn out not to work, but implementation should still be in the back of your mind. After all, there’s no point testing an intervention that can never be implemented, so it helps to keep implementation in mind while the practice is being refined.

If the practice of interest has shown efficacy, you then have to ask whether it has shown “effectiveness”, which is whether it works in the real world. The controlled settings and restrictive eligibility criteria of efficacy studies are dropped; instead, the people who are going to receive the practice receive it in the way and setting that they normally would. If there is no existing evidence of effectiveness, you’ll carry out effectiveness research. Sometimes there is some evidence of effectiveness, but not in a particular setting or with a particular group of people. In those cases, you might run what’s called a “hybrid effectiveness-implementation trial”, which looks at effectiveness (whether it works in the real world) and implementation (how to get it used) at the same time. Think of the first self-driving cars, for example, when they were being tested by ordinary people in certain cities. The testers weren’t just checking whether the cars worked in the city, but also whether people would use them, who would use them, and when, why, and how.

When there is already plenty of evidence of effectiveness, you might do an implementation-focused study. These studies often focus on a few key implementation science concepts, such as barriers (obstacles that stop things from being implemented) and facilitators (things that help implementation), or on testing implementation strategies (the things you can do to facilitate implementation, such as training programs or financial incentives).

Implementation science is involved in all stages of the research process, but especially in the green sections of the graphic above, where there is already evidence of efficacy and effectiveness. This is why you’ll often see implementation scientists talk about implementing “evidence-based interventions”. If you take away one thing from this section, it should be that implementation science primarily aims to increase the uptake and adoption of research-based practices, rather than to assess the effectiveness or impact of research.

Why is implementation science important?

Implementation scientists will often talk about the “research-practice gap”. This refers to the gap between research being produced and that research being used in routine practice. Implementation science as a field essentially tries to reduce that gap. But how big a problem is it?

If you ask an implementation scientist, there’s a good chance they’ll hit you with the following statistic: on average, it takes 17 years for evidence to become clinical practice. Repeated studies have found similar time lags. Often, findings never make it into practice at all. For instance, fewer than 14% of behavioral interventions are incorporated into clinical practice. On average, Americans receive only about half of the recommended care processes.

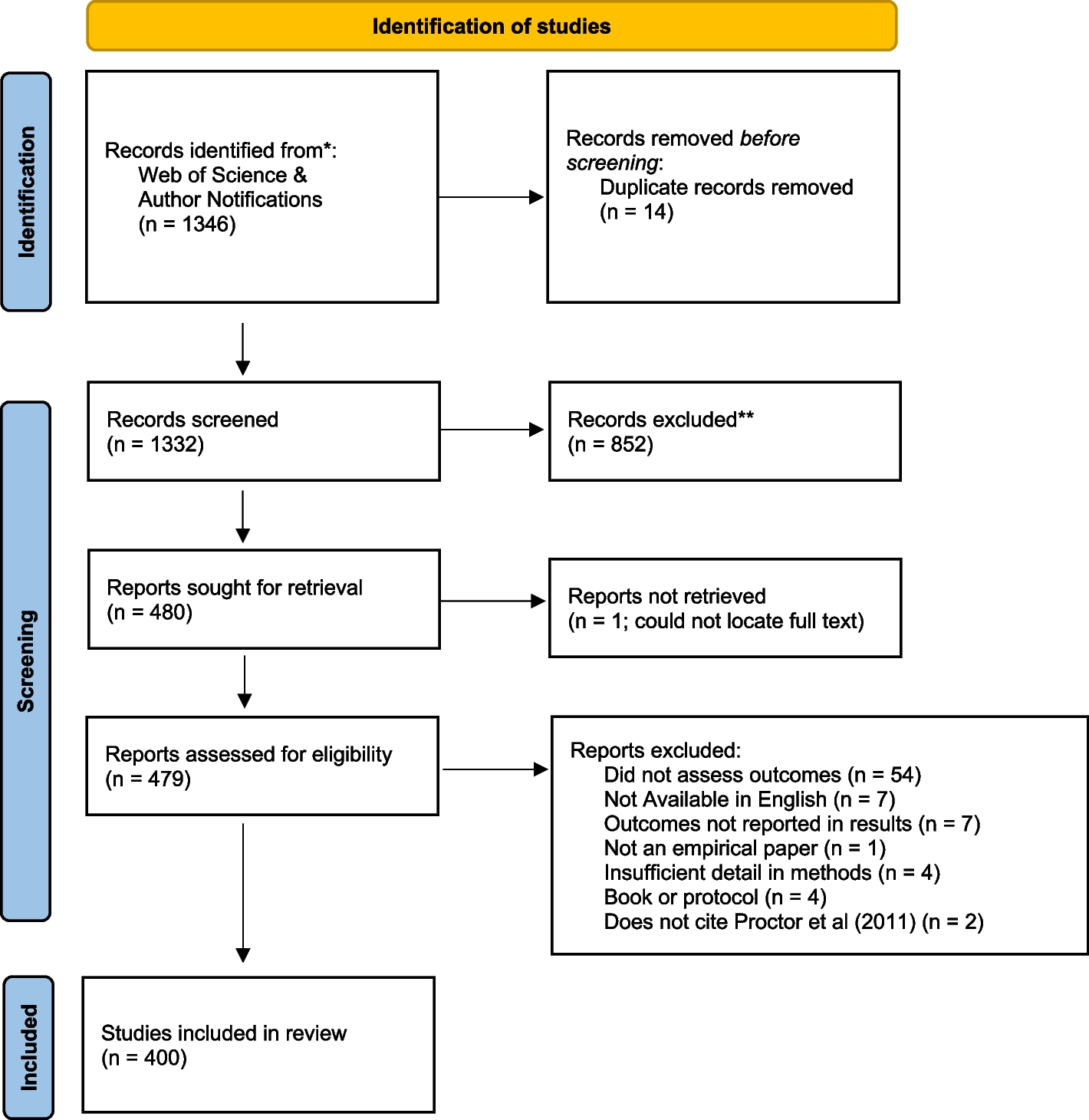

To address these problems, implementation scientists focus on improving “implementation outcomes”. You’ll often see people refer to the eight outcomes laid out by Proctor et al.:

- Acceptability

- Adoption (or uptake)

- Appropriateness

- Feasibility

- Fidelity

- Implementation cost

- Penetration

- Sustainability

By focusing on improving these outcomes, implementation scientists can help increase the uptake of evidence-based practices and, in turn, drive significant improvements for communities. As more attention is paid to the research-practice gap and to making research more efficient and impactful, implementation science continues to grow and to be recognized as a vital part of the research ecosystem.


If you want to learn more about other topics, visit: https://www.proofshift.com/implementation-science/ and select a topic you are interested in exploring in more detail.

Sources: